Comparative testing of PHP frameworks

When developing any software product, developers must first choose the right software platform, since it determines the structure of the whole system. This means weighing quite a few characteristics, from "How fast will everything run?" to "Do we need this feature?", with much more in between. It is during brainstorming that the team compares a framework's ease of use, its speed, and the set of features implemented in it or in modules compatible with it.

But which one is better, faster, and more productive?

Developers constantly compare frameworks to answer this question for themselves. For example, a comparison of PHP frameworks can be found in an article by Lukasz Kujawa. The performance evaluation relied on the PHP Framework Benchmark project.

PHP Framework Benchmark supports many frameworks (not only the ones covered here), but its author is in no hurry to add new framework versions to the repository. That is a pity, but not fatal: adding a new version yourself is not difficult.

One of the main goals of this article is to measure, in practice, how much performance and efficiency newer PHP versions bring. For this purpose, the tests were run on PHP 5.6, 7.0, and 7.1.

What will we compare?

The following frameworks were chosen for comparison:

- slim-3.0

- ci-3.0

- lumen-5.1

- yii-2.0

- silex-1.3

- fuel-1.8

- phpixie-3.2

- zf-2.5

- zf-3.0

- symfony-2.7

- symfony-3.0

- laravel-5.3

- laravel-5.4

- bluz (version 7.0.0 for PHP 5.6 and version 7.4 for PHP 7.0 and above)

- ze-1.0

- phalcon-3.0

The tests are roughly divided into four types:

- throughput

- memory

- exec time

- included files

Testing methodology and the test rig

The tests were run on a machine with the following characteristics:

- Operating system: Linux Mint 17 Cinnamon 64-bit

- Cinnamon version: 2.2.16

- Linux kernel: 3.13.0-24-generic

- Processor: Intel Core i3-4160 CPU @ 3.60 GHz x 2

- Memory: 8 GB

- Server version: Apache/2.4.7 (Ubuntu)

- Server build: Jul 15 2016

- PHP: 7.1 / 7.0 / 5.6

After running git clone, the benchmark is on our machine. Since we used Linux Mint, the following kernel settings had to be added to /etc/sysctl.conf:

```
# Added
net.netfilter.nf_conntrack_max = 100000
net.nf_conntrack_max = 100000
net.ipv4.tcp_max_tw_buckets = 180000
net.ipv4.tcp_tw_recycle = 1
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_fin_timeout = 10
```

Then apply them:

```
sudo sysctl -p
```

A little about the structure of php-framework-benchmark:

/benchmarks contains the bash scripts that collect the number of requests per second (using the ab utility), memory usage, execution time, and the number of files included from the "entry point."

/lib contains the files that process the output of the "Hello, World" page, print the results table, and build the charts.

/output is where logs are written after testing. There are two files for every tested framework: `<name>.ab.log`, the log produced by the ab utility, and `<name>.output`, which contains what the page displayed (usually "hello world", memory usage, execution time, and included files).

The remaining folders contain skeleton applications, one per framework, each with a single controller that returns the string "Hello, World!" when its URI (built according to that framework's routing rules) is requested. Before running the test, you need to install the frameworks. There are two ways to do this.

The command `bash setup.sh` installs the frameworks listed in list.sh; you can edit that file to add or remove folders for testing, so you can create whatever configuration you need.

Alternatively, `bash setup.sh fatfree-3.5/ slim-3.0/ lumen-5.1/ silex-1.3/` installs only the frameworks passed as arguments. Once the frameworks were set up, we launched the tests with `bash benchmark.sh`.

When the run finished, the terminal displayed a table of the tested frameworks with requests per second, peak memory, and the relative value of each of these indicators.
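The "relative" columns are easiest to read as multiples of a baseline. The sketch below assumes (we have not checked the benchmark's /lib code) that each value is divided by the smallest value in its column, so the slowest framework gets 1.0 for throughput and the leanest one gets 1.0 for memory. The PHP 7.1 figures for a few frameworks are copied from the tables later in this article; the function name `relative` is our own.

```python
# PHP 7.1 figures for a subset of frameworks, copied from this article's tables.
RPS_PHP71 = {
    "phalcon-3.1.2": 7535.00,
    "ci-3.0": 4998.05,
    "slim-3.0": 3681.00,
    "symfony-3.0": 1022.28,
    "laravel-5.3": 625.99,
    "laravel-5.4": 600.42,
}
PEAK_MB_PHP71 = {
    "phalcon-3.1.2": 0.37,
    "ci-3.0": 0.38,
    "slim-3.0": 0.55,
    "symfony-3.0": 1.32,
    "laravel-5.3": 2.04,
    "laravel-5.4": 1.49,
}


def relative(values):
    """Express every value as a multiple of the smallest one.

    Assumed normalisation: the smallest value in the column becomes 1.0.
    """
    base = min(values.values())
    return {name: round(value / base, 1) for name, value in values.items()}


rel_rps = relative(RPS_PHP71)
rel_mem = relative(PEAK_MB_PHP71)
for name in RPS_PHP71:
    print(f"{name:15} {rel_rps[name]:5.1f}x throughput {rel_mem[name]:4.1f}x memory")
```

Read this way, Phalcon handles about 12.5 times as many requests per second as Laravel 5.4 on PHP 7.1, while Laravel 5.3 needs about 5.5 times Phalcon's peak memory.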

As you might expect, Apache also has to be configured to serve the folder containing the frameworks. Again, the author describes everything needed in the README, so this causes no problems.

Test results of the frameworks

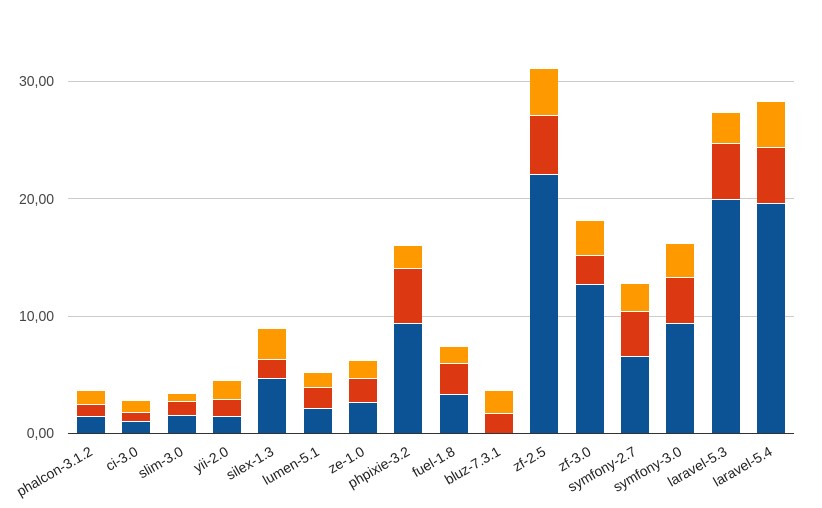

Each section presents the results in two forms.

The first form is visual. Each characteristic has four diagrams: three compare the frameworks with each other, one PHP version per diagram, and the fourth is a summary diagram by framework. This lets you trace how both PHP and the frameworks have improved.

The second form is a table of results (charts are nice, but let's get serious: give me more numbers!).

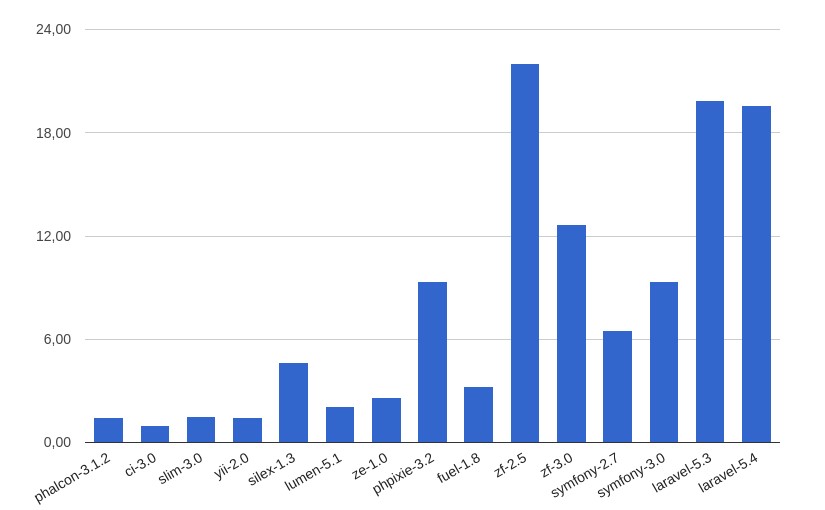

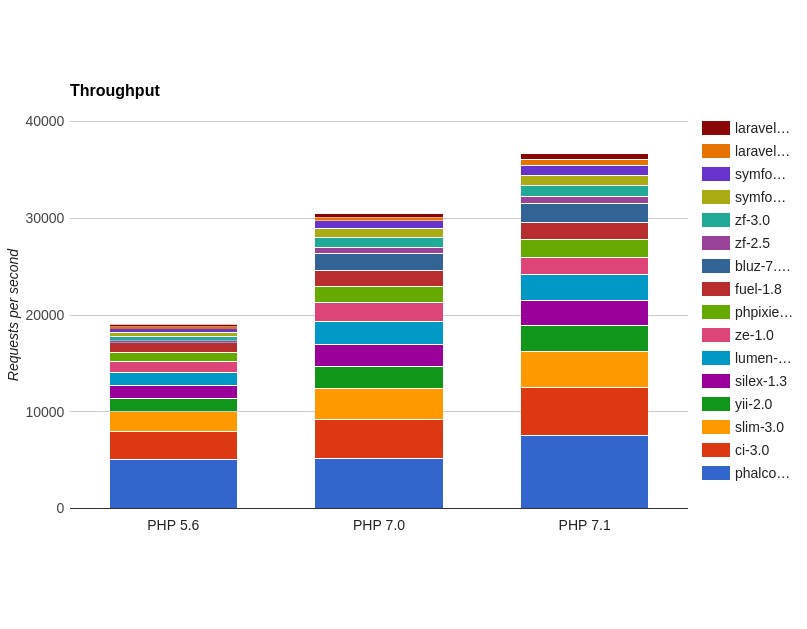

Throughput

In general terms, throughput is the maximum rate at which something can be produced or processed.

In our case, it is measured as the number of requests a framework can process per second. The higher this number, the more productive the application, since it can correctly serve requests from a larger number of users.
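This number comes from the "Requests per second" line of ab's report. The scripts in /benchmarks are bash; the Python sketch below is only an illustration of pulling that figure, plus the request counters, out of a saved `<name>.ab.log`. The sample text is an excerpt of the Slim 3.0 run shown near the end of this article, and the result keys (`complete`, `failed`, `rps`) are names we made up.

```python
import re

# Lines of interest in ab's report; ab pads values with a variable number of spaces.
AB_PATTERNS = {
    "complete": r"^Complete requests:\s+(\d+)",
    "failed": r"^Failed requests:\s+(\d+)",
    "rps": r"^Requests per second:\s+([\d.]+)",
}


def parse_ab(log_text):
    """Extract request counters and requests per second from ab output."""
    result = {}
    for key, pattern in AB_PATTERNS.items():
        match = re.search(pattern, log_text, re.MULTILINE)
        if match is None:
            raise ValueError(f"{key!r} not found in ab output")
        value = match.group(1)
        result[key] = float(value) if key == "rps" else int(value)
    return result


# Excerpt of the Slim 3.0 run with "Connection: close" shown later in this article.
SLIM_LOG = """\
Concurrency Level: 10
Time taken for tests: 3.000 seconds
Complete requests: 10709
Failed requests: 0
Requests per second: 3569.53 [#/sec] (mean)
"""

print(parse_ab(SLIM_LOG))
```

For the excerpt above this prints `{'complete': 10709, 'failed': 0, 'rps': 3569.53}`.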

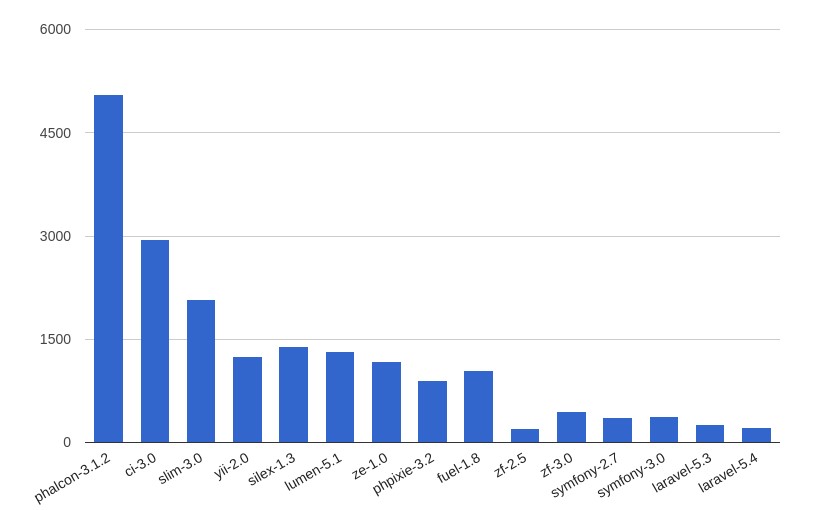

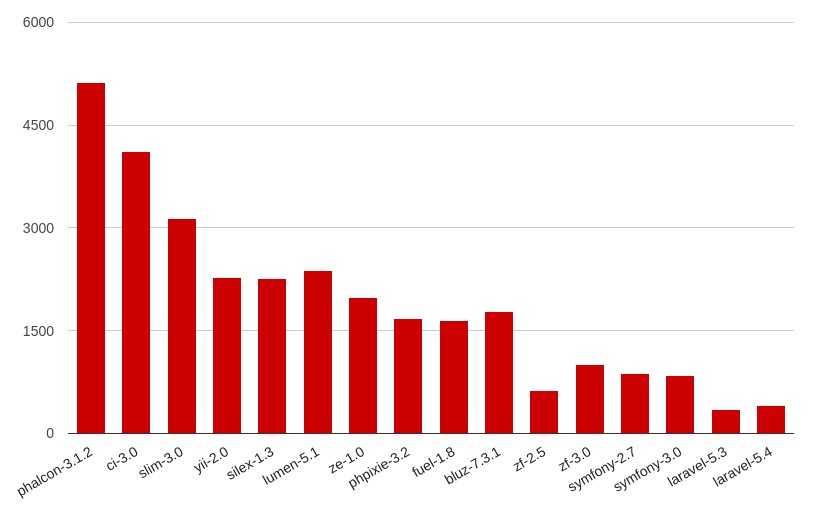

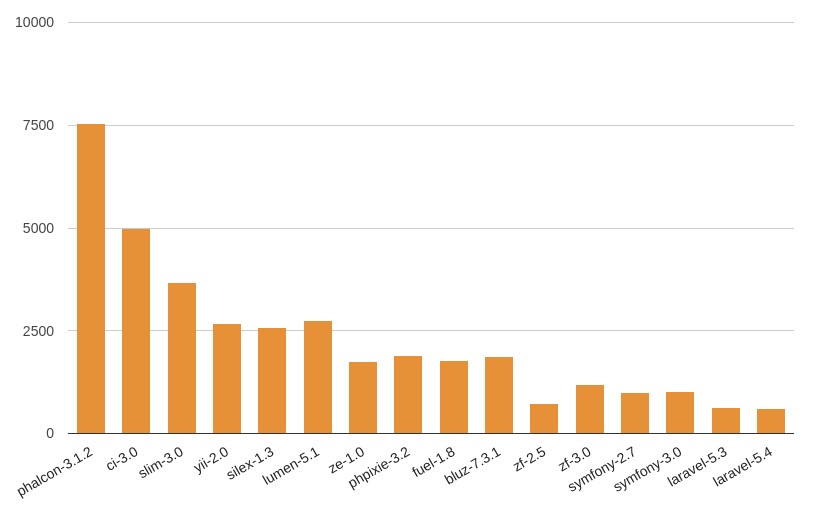

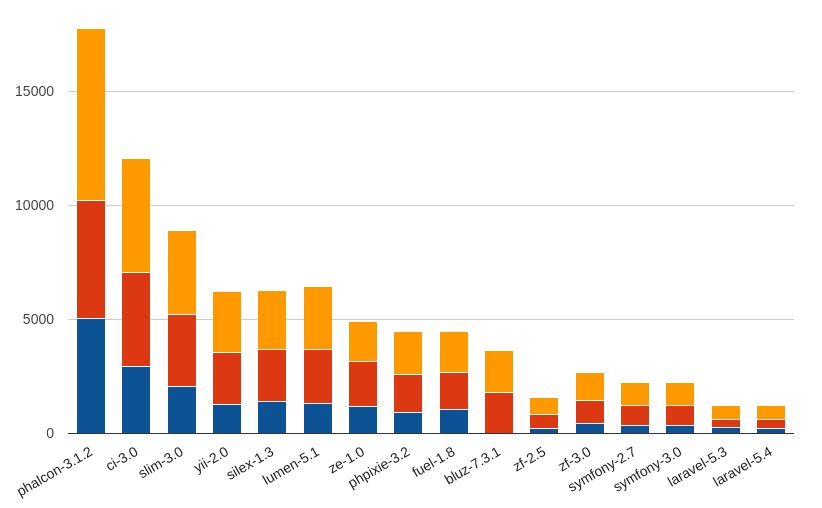

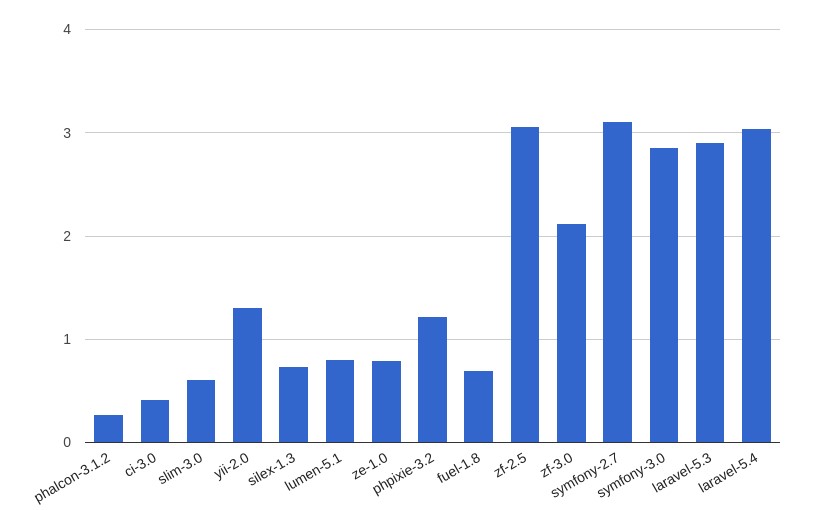

As a result of the tests we received the following data:

Requests per second

| Framework | php5.6 | php7.0 | php7.1 |
|---|---:|---:|---:|
| phalcon-3.1.2 | 5058.00 | 5130.00 | 7535.00 |
| ci-3.0 | 2943.55 | 4116.31 | 4998.05 |
| slim-3.0 | 2074.59 | 3143.94 | 3681.00 |
| yii-2.0 | 1256.31 | 2276.37 | 2664.61 |
| silex-1.3 | 1401.92 | 2263.90 | 2576.22 |
| lumen-5.1 | 1316.46 | 2384.24 | 2741.81 |
| ze-1.0 | 1181.14 | 1989.99 | 1741.81 |
| phpixie-3.2 | 898.63 | 1677.15 | 1896.23 |
| fuel-1.8 | 1044.77 | 1646.67 | 1770.13 |
| bluz-7.3.1 | –* | 1774.00 | 1890.00 |
| zf-2.5 | 198.66 | 623.71 | 739.12 |
| zf-3.0 | 447.88 | 1012.57 | 1197.26 |
| symfony-2.7 | 360.03 | 873.40 | 989.57 |
| symfony-3.0 | 372.19 | 853.51 | 1022.28 |
| laravel-5.3 | 258.62 | 346.25 | 625.99 |
| laravel-5.4 | 219.82 | 413.49 | 600.42 |

\* – bluz-7.3.1 does not support PHP 5.6

For illustration, we built a chart for each PHP version (PHP 5.6, PHP 7.0, and PHP 7.1), plus a summary diagram by framework:

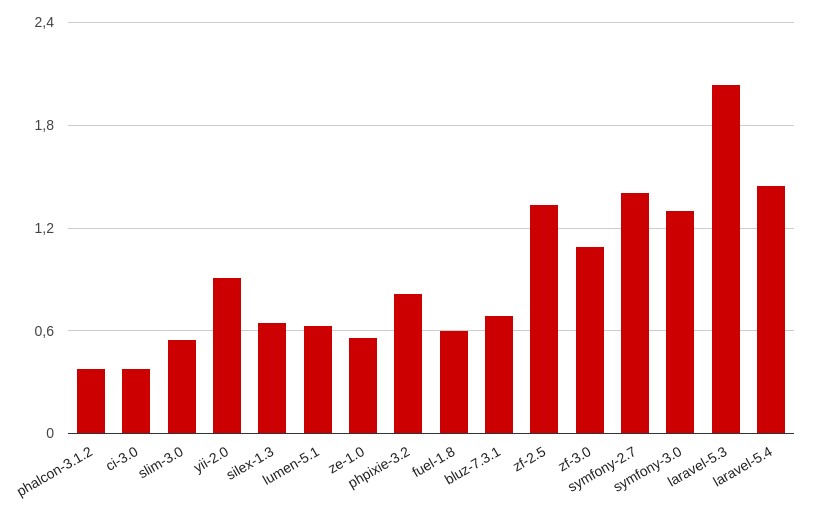

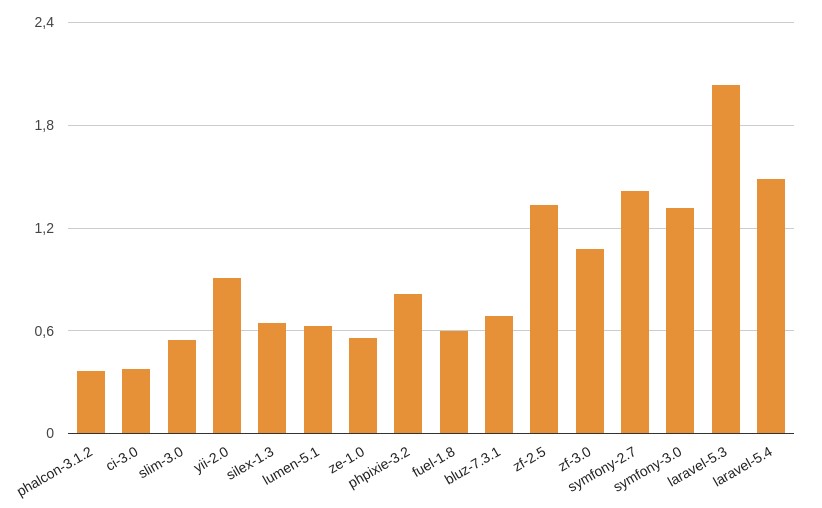

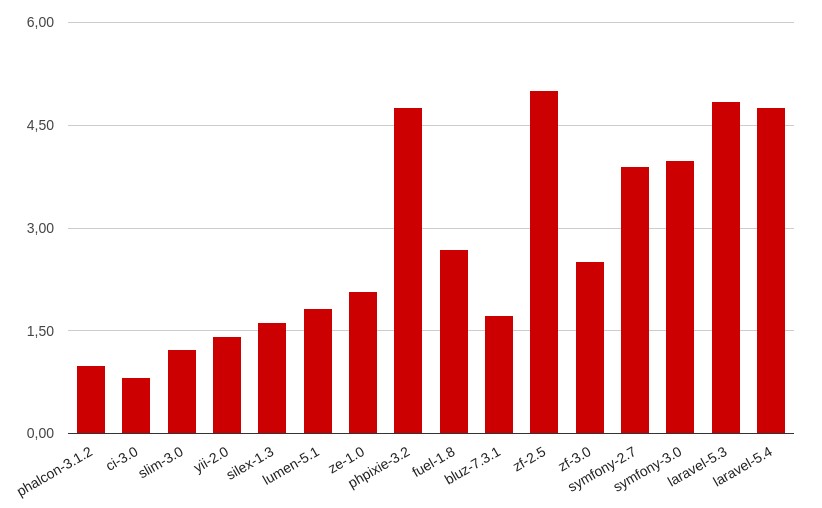

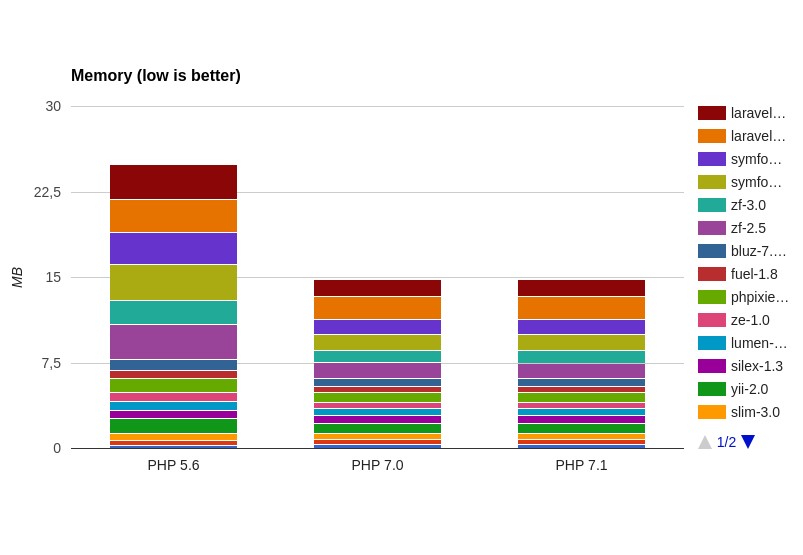

Peak memory

This characteristic (in MB, megabytes) is the peak amount of memory the framework uses while handling the request, so the lower this number, the better for us and for the server:

Peak memory (MB)

| Framework | php5.6 | php7.0 | php7.1 |
|---|---:|---:|---:|
| phalcon-3.1.2 | 0.27 | 0.38 | 0.37 |
| ci-3.0 | 0.42 | 0.38 | 0.38 |
| slim-3.0 | 0.61 | 0.55 | 0.55 |
| yii-2.0 | 1.31 | 0.91 | 0.91 |
| silex-1.3 | 0.74 | 0.65 | 0.65 |
| lumen-5.1 | 0.80 | 0.63 | 0.63 |
| ze-1.0 | 0.79 | 0.56 | 0.56 |
| phpixie-3.2 | 1.22 | 0.82 | 0.82 |
| fuel-1.8 | 0.70 | 0.60 | 0.60 |
| bluz | –* | 0.69 | 0.69 |
| zf-2.5 | 3.06 | 1.34 | 1.34 |
| zf-3.0 | 2.12 | 1.09 | 1.08 |
| symfony-2.7 | 3.11 | 1.41 | 1.42 |
| symfony-3.0 | 2.86 | 1.30 | 1.32 |
| laravel-5.3 | 2.91 | 2.04 | 2.04 |
| laravel-5.4 | 3.04 | 1.45 | 1.49 |

\* – bluz-7.3.1 does not support PHP 5.6
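In PHP, a figure like this is usually obtained with memory_get_peak_usage(); we assume, without having checked, that the benchmark's hello-world controllers report something similar. As a rough Python analogue (not the benchmark's code), the standard tracemalloc module reports the peak amount of memory allocated while a handler runs. The handler below is hypothetical, and "MB" is taken here as 1024 × 1024 bytes.

```python
import tracemalloc


def run_with_peak_memory(handler):
    """Run handler() and return (its result, peak traced allocation in MB).

    tracemalloc only sees allocations made by Python after start(), so this
    is an analogue of PHP's memory_get_peak_usage(), not an equivalent.
    """
    tracemalloc.start()
    try:
        result = handler()
        _, peak_bytes = tracemalloc.get_traced_memory()  # (current, peak)
    finally:
        tracemalloc.stop()
    return result, peak_bytes / (1024 * 1024)


def hello_handler():
    # Hypothetical handler: builds a temporary list, then returns the greeting.
    squares = [n * n for n in range(200_000)]
    return "Hello, World!" if squares else ""


body, peak_mb = run_with_peak_memory(hello_handler)
print(body, f"{peak_mb:.2f} MB peak")
```

The temporary list is freed before the handler returns, yet it still shows up in the peak, which is exactly why "peak" rather than "current" memory is the figure that matters for sizing a server.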

And graphics:

PHP 5.6

PHP 7.0

PHP 7.1

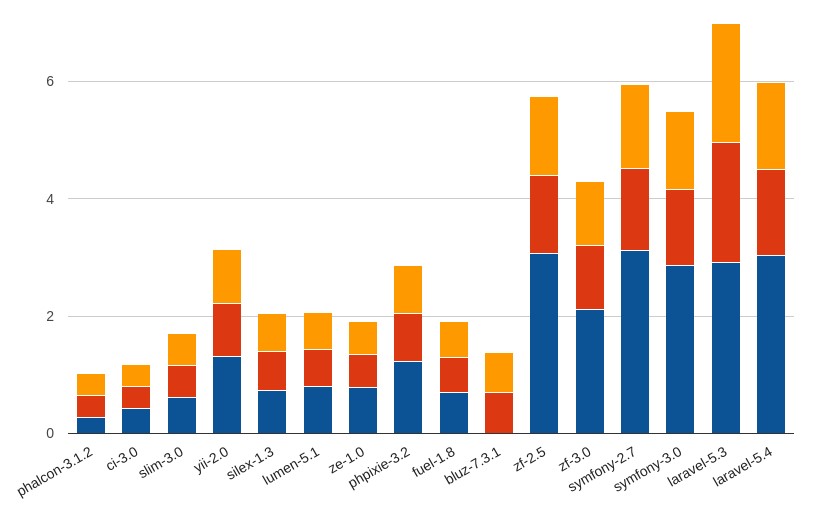

Summary storage diagram (by frameworks)

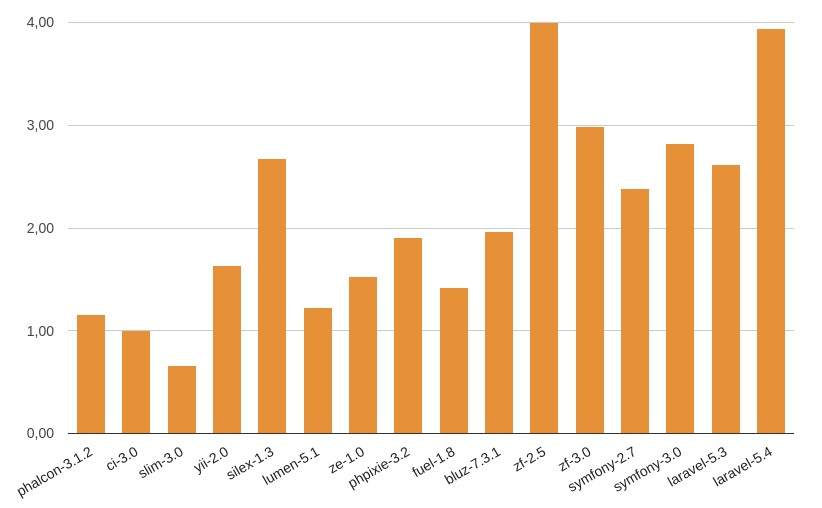

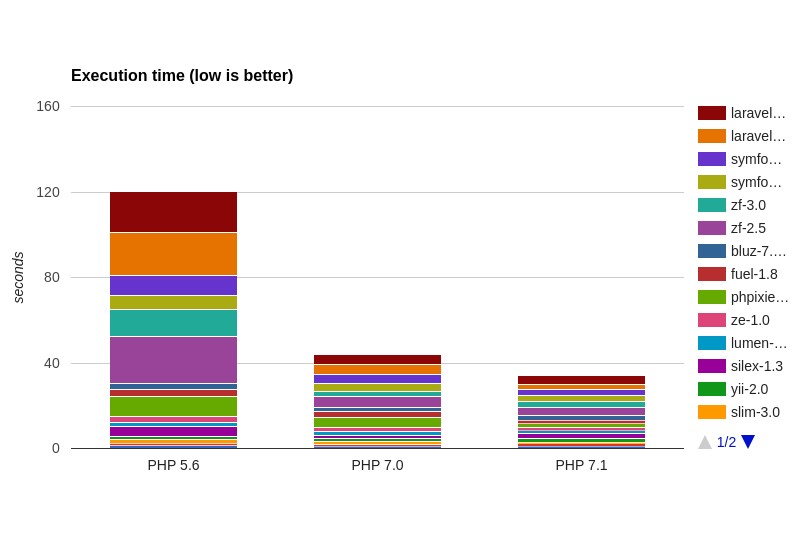

Execution time

Execution time is the time the system takes to complete a task, measured from the start of execution until the system returns the result.

Measuring it is quite simple. We have already seen how many requests per second each framework can process and how much memory it takes; now let us look at how long it takes to get a response from the server. Again, the lower this value, the better, both for us and for the nerves of our application's users.
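Such a measurement boils down to two timestamps: one when handling starts and one when the result is ready. PHP code would typically use microtime(true) for this; we have not checked exactly how the benchmark's controllers do it. A minimal Python sketch of the same idea, using the monotonic time.perf_counter() clock and a hypothetical handler that simulates 5 ms of work:

```python
import time


def run_timed(handler):
    """Run handler() and return (its result, elapsed wall-clock time in ms)."""
    start = time.perf_counter()  # monotonic, high-resolution clock
    result = handler()
    elapsed_ms = (time.perf_counter() - start) * 1000
    return result, elapsed_ms


def slow_hello():
    # Hypothetical handler that simulates 5 ms of work.
    time.sleep(0.005)
    return "Hello, World!"


body, ms = run_timed(slow_hello)
print(body, f"{ms:.3f} ms")
```

A monotonic clock is used so that system clock adjustments during the request cannot produce negative or inflated timings.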

The table below compares request execution time across frameworks and PHP versions. Times are in milliseconds (ms).

Execution time (ms)

| Framework | php5.6 | php7.0 | php7.1 |
|---|---:|---:|---:|
| phalcon-3.1.2 | 1.300 | 1.470 | 1.080 |
| ci-3.0 | 0.996 | 0.818 | 1.007 |
| slim-3.0 | 1.530 | 1.228 | 0.662 |
| yii-2.0 | 1.478 | 1.410 | 1.639 |
| silex-1.3 | 4.657 | 1.625 | 2.681 |
| lumen-5.1 | 2.121 | 1.829 | 1.228 |
| ze-1.0 | 2.629 | 2.069 | 1.528 |
| phpixie-3.2 | 9.329 | 4.757 | 1.911 |
| fuel-1.8 | 3.283 | 2.684 | 1.425 |
| bluz | –* | 1.619 | 1.921 |
| zf-2.5 | 22.042 | 5.011 | 3.998 |
| zf-3.0 | 12.680 | 2.506 | 2.989 |
| symfony-2.7 | 6.529 | 3.902 | 2.384 |
| symfony-3.0 | 9.335 | 3.987 | 2.820 |
| laravel-5.3 | 19.885 | 4.840 | 2.622 |
| laravel-5.4 | 19.561 | 4.758 | 3.940 |

\* – bluz-7.3.1 does not support PHP 5.6

Charts for PHP 5.6, PHP 7.0, and PHP 7.1, and the summary diagram by framework:

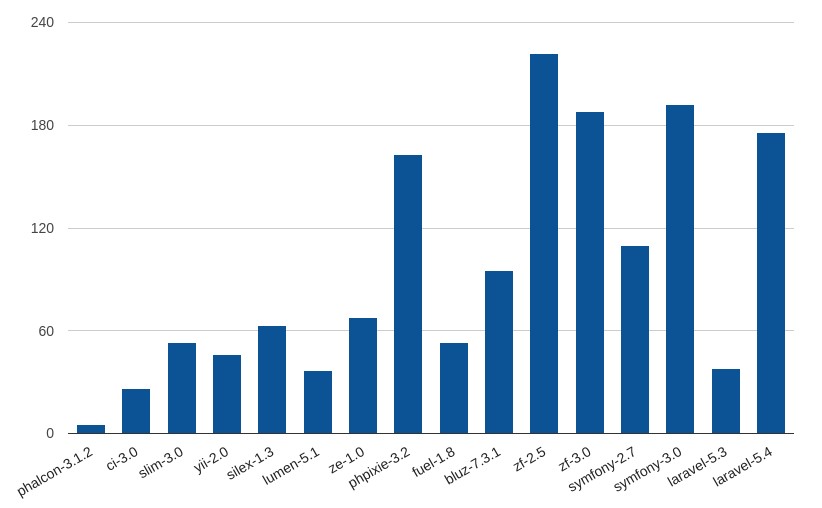

Included files

This characteristic is the number of files included when the framework's "entry point" file is processed. The system spends time locating and loading each file, so the fewer files, the faster the application starts the first time. On subsequent runs, the framework usually works with a cache, which speeds things up:

| Framework | Included files |
|---|---:|
| phalcon-3.1.2 | 5 |
| ci-3.0 | 26 |
| slim-3.0 | 53 |
| yii-2.0 | 46 |
| silex-1.3 | 63 |
| lumen-5.1 | 37 |
| ze-1.0 | 68 |
| phpixie-3.2 | 163 |
| fuel-1.8 | 53 |
| bluz-7.3.1 | 95 |
| zf-2.5 | 222 |
| zf-3.0 | 188 |
| symfony-2.7 | 110 |
| symfony-3.0 | 192 |
| laravel-5.3 | 38 |
| laravel-5.4 | 176 |
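In PHP, the list of files loaded so far is returned by get_included_files(), and counting it at the end of a request gives this kind of figure (we have not verified that the benchmark does exactly that). The closest Python analogue is sys.modules. The sketch below starts a fresh interpreter, imports one module, and counts how many modules that single import pulled in, which mirrors counting the files behind an entry point. The modules probed are arbitrary examples.

```python
import subprocess
import sys

# Runs in a fresh interpreter so that earlier imports do not skew the count.
PROBE = """\
import sys
before = set(sys.modules)
import {module}
print(len(set(sys.modules) - before))
"""


def modules_pulled_in(module):
    """Return how many modules importing `module` loads into a fresh interpreter."""
    completed = subprocess.run(
        [sys.executable, "-c", PROBE.format(module=module)],
        capture_output=True,
        text=True,
        check=True,
    )
    return int(completed.stdout.strip())


for name in ("sys", "json", "http.server"):
    print(f"{name:12} {modules_pulled_in(name)}")
```

`sys` is always loaded, so it adds nothing, while a larger package such as `http.server` drags in many dependencies; the exact counts depend on the Python version.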

The difference between Laravel 5.3 and Laravel 5.4 in the number of included files may seem strange and spark discussion, so let us explain. It comes down to the command php artisan optimize --force.

In Laravel 5.3, this command generates the file compiled.php, which collects many framework files into one and so reduces the number of included files. Laravel 5.4 no longer generates this file: the developers removed the feature, considering OPcache the right tool for performance tuning.

Is it worth upgrading?

The consolidated data by version clearly show the performance boost and the more efficient resource usage you get when moving to (or starting out with) a newer PHP version.

In the transition from PHP 5.6 to PHP 7.0, the average throughput gain was almost +90%; the smallest gain was +33% for Laravel 5.3 and the largest over +200% for Zend Framework 2.5. These figures leave out Phalcon, whose throughput rose by only about 1.4%.

The transition from 7.0 to 7.1 is less dramatic in numbers, but on average it still gives almost 20% more throughput.
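The averages quoted above can be recomputed from the throughput table with a few lines of Python. One assumption is ours: the quoted figures (about +90%, minimum Laravel 5.3, maximum ZF 2.5) only come out when Phalcon, a C extension whose throughput barely moved from 5.6 to 7.0, is left out, so the helper `gains` (a name we chose) skips it by default. The average is a plain mean of per-framework percentage changes.

```python
from statistics import mean

# Requests per second from the table above: (php5.6, php7.0, php7.1).
# None marks the missing bluz result on PHP 5.6.
RPS = {
    "phalcon-3.1.2": (5058.00, 5130.00, 7535.00),
    "ci-3.0": (2943.55, 4116.31, 4998.05),
    "slim-3.0": (2074.59, 3143.94, 3681.00),
    "yii-2.0": (1256.31, 2276.37, 2664.61),
    "silex-1.3": (1401.92, 2263.90, 2576.22),
    "lumen-5.1": (1316.46, 2384.24, 2741.81),
    "ze-1.0": (1181.14, 1989.99, 1741.81),
    "phpixie-3.2": (898.63, 1677.15, 1896.23),
    "fuel-1.8": (1044.77, 1646.67, 1770.13),
    "bluz-7.3.1": (None, 1774.00, 1890.00),
    "zf-2.5": (198.66, 623.71, 739.12),
    "zf-3.0": (447.88, 1012.57, 1197.26),
    "symfony-2.7": (360.03, 873.40, 989.57),
    "symfony-3.0": (372.19, 853.51, 1022.28),
    "laravel-5.3": (258.62, 346.25, 625.99),
    "laravel-5.4": (219.82, 413.49, 600.42),
}
PHP_5_6, PHP_7_0, PHP_7_1 = 0, 1, 2


def gains(table, old, new, skip=("phalcon-3.1.2",)):
    """Percentage change per framework between two PHP versions.

    Frameworks in `skip` (by default Phalcon, as assumed above) and rows
    with a missing value are left out.
    """
    return {
        name: (row[new] / row[old] - 1) * 100
        for name, row in table.items()
        if name not in skip and row[old] is not None and row[new] is not None
    }


up70 = gains(RPS, PHP_5_6, PHP_7_0)
print(f"5.6 -> 7.0: mean {mean(up70.values()):+.1f}%, "
      f"min {min(up70, key=up70.get)}, max {max(up70, key=up70.get)}")
up71 = gains(RPS, PHP_7_0, PHP_7_1)
print(f"7.0 -> 7.1: mean {mean(up71.values()):+.1f}%")
all71 = gains(RPS, PHP_7_0, PHP_7_1, skip=())  # Phalcon included this time
print("largest 7.0 -> 7.1 gain:", max(all71, key=all71.get))
```

The last line also confirms the "funny fact" below: even with Phalcon included, Laravel 5.3 has the largest gain from 7.0 to 7.1.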

Summarizing all of the throughput data across PHP versions gives the following summary charts:

A curious fact: Laravel 5.3 showed the smallest gain when moving from PHP 5.6 to PHP 7.0 but the largest when moving from 7.0 to 7.1; as a result, Laravel 5.3 and 5.4 perform almost the same on PHP 7.1.

Memory consumption was also optimized: moving from PHP 5.6 to PHP 7.0 lets your application use about 30% less memory on average.

The update from 7.0 to 7.1 brings practically no further savings, and for the latest Symfony and Laravel versions it even goes into the "minus" column: they start to "eat" a little more.

That leaves execution time, and here too everything looks good:

- Moving from PHP 5.6 to PHP 7.0 cuts execution time by 44% on average

- Moving from PHP 7.0 to PHP 7.1 cuts it by another 14%
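The same check works for memory and execution time. In this sketch, negative numbers mean less memory or a faster response; as with throughput, leaving Phalcon out is our assumption about how the averages were computed, and `changes` is a name we chose.

```python
from statistics import mean

# (php5.6, php7.0, php7.1) from the peak-memory (MB) and execution-time (ms)
# tables; None marks the missing bluz result on PHP 5.6.
PEAK_MB = {
    "phalcon-3.1.2": (0.27, 0.38, 0.37),
    "ci-3.0": (0.42, 0.38, 0.38),
    "slim-3.0": (0.61, 0.55, 0.55),
    "yii-2.0": (1.31, 0.91, 0.91),
    "silex-1.3": (0.74, 0.65, 0.65),
    "lumen-5.1": (0.80, 0.63, 0.63),
    "ze-1.0": (0.79, 0.56, 0.56),
    "phpixie-3.2": (1.22, 0.82, 0.82),
    "fuel-1.8": (0.70, 0.60, 0.60),
    "bluz": (None, 0.69, 0.69),
    "zf-2.5": (3.06, 1.34, 1.34),
    "zf-3.0": (2.12, 1.09, 1.08),
    "symfony-2.7": (3.11, 1.41, 1.42),
    "symfony-3.0": (2.86, 1.30, 1.32),
    "laravel-5.3": (2.91, 2.04, 2.04),
    "laravel-5.4": (3.04, 1.45, 1.49),
}
EXEC_MS = {
    "phalcon-3.1.2": (1.300, 1.470, 1.080),
    "ci-3.0": (0.996, 0.818, 1.007),
    "slim-3.0": (1.530, 1.228, 0.662),
    "yii-2.0": (1.478, 1.410, 1.639),
    "silex-1.3": (4.657, 1.625, 2.681),
    "lumen-5.1": (2.121, 1.829, 1.228),
    "ze-1.0": (2.629, 2.069, 1.528),
    "phpixie-3.2": (9.329, 4.757, 1.911),
    "fuel-1.8": (3.283, 2.684, 1.425),
    "bluz": (None, 1.619, 1.921),
    "zf-2.5": (22.042, 5.011, 3.998),
    "zf-3.0": (12.680, 2.506, 2.989),
    "symfony-2.7": (6.529, 3.902, 2.384),
    "symfony-3.0": (9.335, 3.987, 2.820),
    "laravel-5.3": (19.885, 4.840, 2.622),
    "laravel-5.4": (19.561, 4.758, 3.940),
}


def changes(table, old, new, skip=("phalcon-3.1.2",)):
    """Percentage change per framework between columns `old` and `new`."""
    return {
        name: (row[new] / row[old] - 1) * 100
        for name, row in table.items()
        if name not in skip and row[old] is not None and row[new] is not None
    }


print(f"memory 5.6 -> 7.0: {mean(changes(PEAK_MB, 0, 1).values()):+.1f}%")
print(f"time   5.6 -> 7.0: {mean(changes(EXEC_MS, 0, 1).values()):+.1f}%")
print(f"time   7.0 -> 7.1: {mean(changes(EXEC_MS, 1, 2).values()):+.1f}%")
grew = sorted(name for name, c in changes(PEAK_MB, 1, 2).items() if c > 0)
print("more memory on 7.1:", grew)
```

The results land close to the figures above (roughly -33% memory, -44% and -15% execution time), and the frameworks that need slightly more memory on 7.1 are Symfony 2.7, Symfony 3.0, and Laravel 5.4.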

And a few more summary charts for illustration:

Note: testing with ab, and what we ran into

"And what about Slim and PHPixie?" This question prompted us to investigate how the ab utility behaves with these frameworks.

Let's run the test separately for Slim 3.0:

```
ab -c 10 -t 3 http://localhost/php-framework-benchmark/slim-3.0/index.php/hello/index
```

```
Concurrency Level: 10
Time taken for tests: 5.005 seconds
Complete requests: 2
Failed requests: 0
Total transferred: 1800 bytes
HTML transferred: 330 bytes
Requests per second: 0.40 [#/sec] (mean)
Time per request: 25024.485 [ms] (mean)
Time per request: 2502.448 [ms] (mean, across all concurrent requests)
Transfer rate: 0.35 [Kbytes/sec] received
```

Something went wrong: only 0.4 requests per second (!). For comparison, here is the same test against Laravel 5.4, which behaves normally:

```
ab -c 10 -t 3 http://localhost/php-framework-benchmark/laravel-5.4/public/index.php/hello/index
```

```
Concurrency Level: 10
Time taken for tests: 3.004 seconds
Complete requests: 1961
Failed requests: 0
Total transferred: 1995682 bytes
HTML transferred: 66708 bytes
Requests per second: 652.86 [#/sec] (mean)
Time per request: 15.317 [ms] (mean)
Time per request: 1.532 [ms] (mean, across all concurrent requests)
Transfer rate: 648.83 [Kbytes/sec] received
```

The cause turned out to be the Keep-Alive connection.

When requests are made with "Connection: keep-alive", subsequent requests to the server reuse the same TCP connection. This is called an HTTP persistent connection; it reduces CPU load on the server side and improves latency and response time.

If a request is made with "Connection: close", the server closes the connection once it has sent the response, so a new TCP connection is established for every request.

HTTP/1.1 clients and servers use keep-alive by default, whereas HTTP/1.0 does not: there, a persistent connection requires an explicit "Connection: keep-alive" header.
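To see the difference without ab, here is a small self-contained Python sketch (not part of the benchmark; the handler and function names are ours): a local HTTP/1.1 server built on the standard http.server module counts the TCP connections it accepts, while an http.client connection sends five requests, first with default keep-alive and then with "Connection: close".

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    # Speaking HTTP/1.1 makes persistent (keep-alive) connections the default.
    protocol_version = "HTTP/1.1"
    connections_opened = 0  # TCP connections accepted by the server

    def setup(self):
        super().setup()
        # setup() runs once per accepted TCP connection, not once per request.
        HelloHandler.connections_opened += 1

    def do_GET(self):
        body = b"Hello, World!"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        if self.close_connection:
            # The client asked for "Connection: close": confirm it, then hang up.
            self.send_header("Connection", "close")
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, fmt, *args):
        pass  # keep the console quiet


def connections_for(n_requests, headers):
    """Send n_requests GETs through one HTTPConnection and return how many
    TCP connections the server had to accept to serve them."""
    HelloHandler.connections_opened = 0
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    client = http.client.HTTPConnection("127.0.0.1", server.server_address[1])
    try:
        for _ in range(n_requests):
            client.request("GET", "/hello/index", headers=headers)
            client.getresponse().read()  # drain the body before the next request
    finally:
        client.close()
        server.shutdown()
        server.server_close()
    return HelloHandler.connections_opened


print("keep-alive:       ", connections_for(5, {}))
print("Connection: close:", connections_for(5, {"Connection": "close"}))
```

With keep-alive all five requests share one connection; with "Connection: close" the server accepts five, one per request, and http.client transparently reconnects each time.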

Thus, the test for Slim should look like this:

```
ab -H 'Connection: close' -c 10 -t 3 http://localhost/php-framework-benchmark/slim-3.0/index.php/hello/index
```

```
Concurrency Level: 10
Time taken for tests: 3.000 seconds
Complete requests: 10709
Failed requests: 0
Total transferred: 2131091 bytes
HTML transferred: 353397 bytes
Requests per second: 3569.53 [#/sec] (mean)
Time per request: 2.801 [ms] (mean)
Time per request: 0.280 [ms] (mean, across all concurrent requests)
Transfer rate: 693.69 [Kbytes/sec] received
```
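The derived lines in ab's report follow from three raw numbers: concurrency level, time taken, and completed requests. As far as we can tell from ab's output and source, requests per second is completed / time, "Time per request (mean)" is concurrency × time / completed, and the "across all concurrent requests" figure is time / completed. The sketch below recomputes them for the three runs above; small differences come from ab's internal timer being more precise than the rounded "Time taken" it prints.

```python
def ab_derived(concurrency, time_taken_s, complete_requests):
    """Recompute ab's derived figures from its raw counters."""
    requests_per_second = complete_requests / time_taken_s
    # "Time per request (mean)": how long each concurrent client waited per request.
    per_request_ms = concurrency * time_taken_s * 1000 / complete_requests
    # "(mean, across all concurrent requests)": wall time divided by requests.
    across_all_ms = time_taken_s * 1000 / complete_requests
    return requests_per_second, per_request_ms, across_all_ms


# (concurrency, time taken in seconds, complete requests) from the runs above.
RUNS = {
    "first run (stalled)": (10, 5.005, 2),
    "laravel-5.4": (10, 3.004, 1961),
    "slim-3.0, Connection: close": (10, 3.000, 10709),
}
for label, raw in RUNS.items():
    rps, per_request, across_all = ab_derived(*raw)
    print(f"{label:28} {rps:9.2f} req/s {per_request:10.3f} ms {across_all:8.3f} ms")
```

For the stalled run, each of the 10 simulated clients spent about 25 seconds per completed request, which is why a throughput of 0.4 requests per second says more about the connection handling than about the framework.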

Conclusion

As expected, the unconditional leader in performance (though not in development speed) is Phalcon. Second place, which is in fact first place among frameworks written in PHP (Phalcon's source code is written in C), goes to CodeIgniter 3!

Of course, remember that each tool has its own purpose. If you choose a small, lightweight framework and use it to build something beyond the simplest applications or a REST API, you will probably run into problems as the functionality grows. Conversely, the overhead of large, full-featured frameworks translates into higher hosting costs, even for elementary applications under heavy load.

The goal of this testing was to strengthen the case for PHP 7.0 and 7.1 in your mind when planning future projects, showing with tables and charts that performance really has improved.

Refactoring of internal data structures and an additional stage before code compilation, an abstract syntax tree (AST), resulted in superior performance and more efficient memory allocation. The published numbers look promising: tests on real applications showed PHP 7.0 to be on average twice as fast as PHP 5.6 while using 50% less memory during request processing, which makes PHP 7.0 a strong competitor to Facebook's HHVM JIT compiler.

Our tests confirm both faster request processing and reduced memory usage in PHP 7.0: compared with PHP 5.6, throughput rose by almost 90% and peak memory fell by about 30% on average.